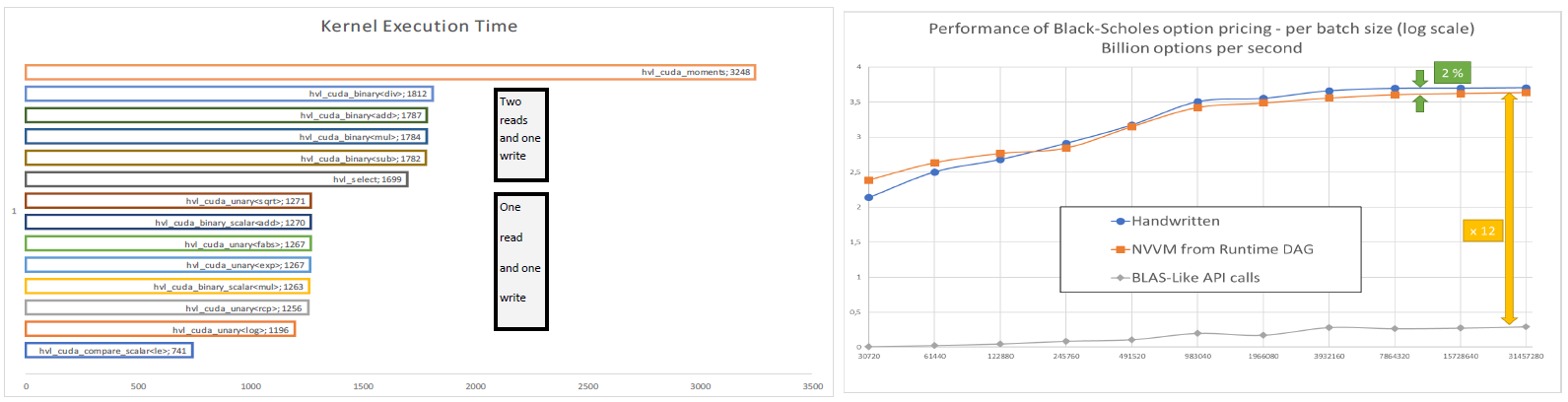

When small tasks are submitted to the GPU, grid scheduling and synchronization costs may be much higher than the computation itself, even when that computation runs on a CPU. In this case, the benefit of GPU computing is lost. Leveraging runtime compilation, we illustrate an approach that generates source code to replace a sequence of library API calls with a single kernel call. The benefits are twofold: (1) scheduling costs are reduced to a minimum, since several calls are merged into a single one; (2) executing the aggregate kernel on a vector of values results in a compute-bound implementation.
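The fusion idea can be sketched on the CPU as follows. This is a minimal sketch under stated assumptions, not the approach presented in the talk: the operation names (`scale`, `shift`, `clamp_min`) and the helpers `run_unfused` and `fuse` are hypothetical, and Python's `compile`/`exec` stands in for CUDA runtime compilation. The unfused path makes one full pass over the data per call, which on a GPU would correspond to one kernel launch per API call; the fused path generates the source of a single loop that applies every operation while the element stays in a local variable.

```python
from typing import Callable, Dict, List, Tuple

Call = Tuple[str, float]  # (hypothetical operation name, constant argument)

# Hypothetical elementwise operations: plain functions for the unfused path,
# source templates for the generated (fused) path.
FUNCS: Dict[str, Callable[[float, float], float]] = {
    "scale": lambda x, c: x * c,
    "shift": lambda x, c: x + c,
    "clamp_min": lambda x, c: max(x, c),
}
TEMPLATES: Dict[str, str] = {
    "scale": "x = x * {c!r}",
    "shift": "x = x + {c!r}",
    "clamp_min": "x = max(x, {c!r})",
}


def run_unfused(calls: List[Call], data: List[float]) -> List[float]:
    # One full pass over the data per call: on a GPU, each pass would be a
    # separate kernel launch reading and writing the whole vector.
    for name, c in calls:
        f = FUNCS[name]
        data = [f(x, c) for x in data]
    return data


def fuse(calls: List[Call]) -> Callable[[List[float]], List[float]]:
    # Generate the source of a single "kernel" applying every call to each
    # element, then compile it at run time (stand-in for CUDA runtime compilation).
    body = "".join("        " + TEMPLATES[name].format(c=c) + "\n" for name, c in calls)
    src = (
        "def fused(data):\n"
        "    out = []\n"
        "    for x in data:\n"
        + body
        + "        out.append(x)\n"
        + "    return out\n"
    )
    namespace: Dict[str, object] = {}
    exec(compile(src, "<fused>", "exec"), namespace)
    return namespace["fused"]  # type: ignore[return-value]


calls = [("scale", 2.0), ("shift", 1.0), ("clamp_min", 0.0)]
data = [-3.0, 0.5, 4.0]
print(fuse(calls)(data))  # [0.0, 2.0, 9.0]
```

Both paths compute the same values; the difference is that the fused version touches each element once, which is what turns a chain of small, launch-dominated calls into one arithmetic-heavy kernel.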

Performance Out of the Box on Multicore and Manycore Hardware with Code Modernization

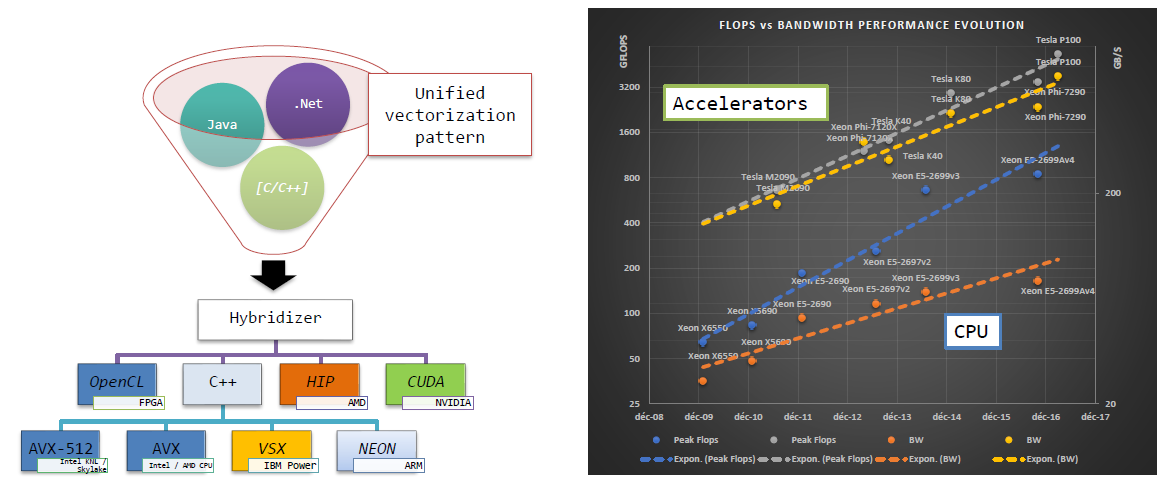

At TES 2017, we presented why code modernization is required to benefit from current and future architectures, and how Hybridizer can help modernize code with little effort.

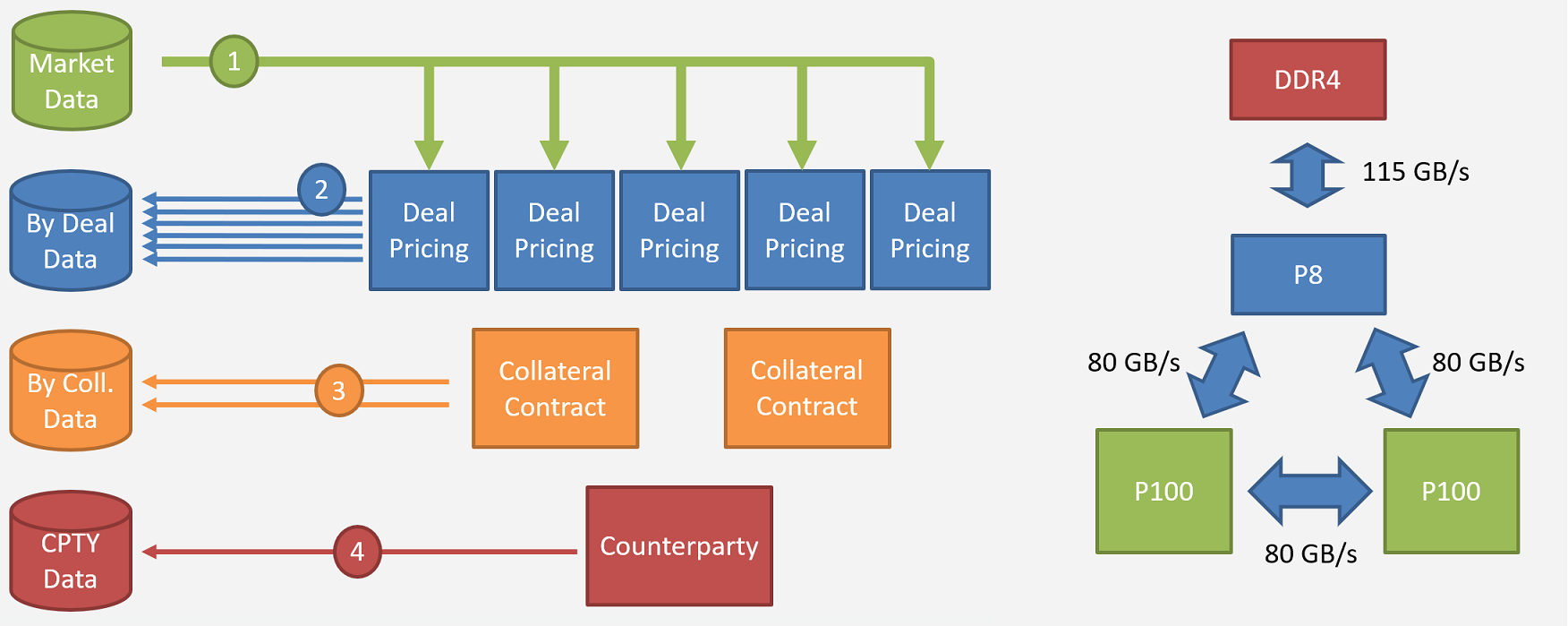

How Pascal and Power 8 Will Accelerate Counterparty Risk Calculations

Since the financial crisis of 2008, regulators have become increasingly demanding in terms of risk analysis and stress scenario simulations. In this talk, we present an approach to counterparty risk calculations based on Directed Acyclic Graphs (DAGs). Calculations are arranged in a graph whose nodes are parts of the simulation. Nodes hold temporary data that may be reused by other calculations further down the graph. This technique offers great flexibility, benefits from improvements in hardware capabilities, and is resilient to new regulatory requirements. We illustrate the potential benefits of Pascal based on the expected performance of NVLink, and show how these features help in the DAG compute environment.
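The reuse of temporary data between nodes can be illustrated with a small sketch. It assumes a graph stored as a dictionary mapping each node to its dependencies and a compute function; the node names (`scenarios`, `mtm_a`, `mtm_b`, `exposure`) and the numbers are hypothetical toy values, not the model from the talk. Nodes are evaluated in topological order with the standard library's `graphlib.TopologicalSorter` (Python 3.9+), and each result is cached so that downstream nodes reuse it instead of recomputing it.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List, Tuple

# node name -> (names of the nodes it depends on, function of their results)
Graph = Dict[str, Tuple[List[str], Callable[..., Any]]]


def evaluate(graph: Graph) -> Dict[str, Any]:
    """Evaluate every node exactly once, in dependency order, caching results."""
    sorter = TopologicalSorter({name: deps for name, (deps, _) in graph.items()})
    cache: Dict[str, Any] = {}
    for name in sorter.static_order():
        deps, fn = graph[name]
        # Temporary data held by upstream nodes is reused, never recomputed.
        cache[name] = fn(*(cache[d] for d in deps))
    return cache


# Toy example with hypothetical node names and values.
simulation_runs = {"scenarios": 0}


def simulate_scenarios() -> List[int]:
    simulation_runs["scenarios"] += 1  # count how often the costly node runs
    return [90, 100, 110]  # e.g. simulated values of an underlying


graph: Graph = {
    "scenarios": ([], simulate_scenarios),
    "mtm_a": (["scenarios"], lambda s: [v - 100 for v in s]),
    "mtm_b": (["scenarios"], lambda s: [2 * (v - 95) for v in s]),
    "exposure": (["mtm_a", "mtm_b"],
                 lambda a, b: [max(x + y, 0) for x, y in zip(a, b)]),
}

results = evaluate(graph)
print(results["exposure"], simulation_runs["scenarios"])  # [0, 10, 40] 1
```

Both trade nodes consume the same simulated scenarios, yet the scenario node runs only once; adding a new downstream calculation means adding a node rather than rewriting the pipeline.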

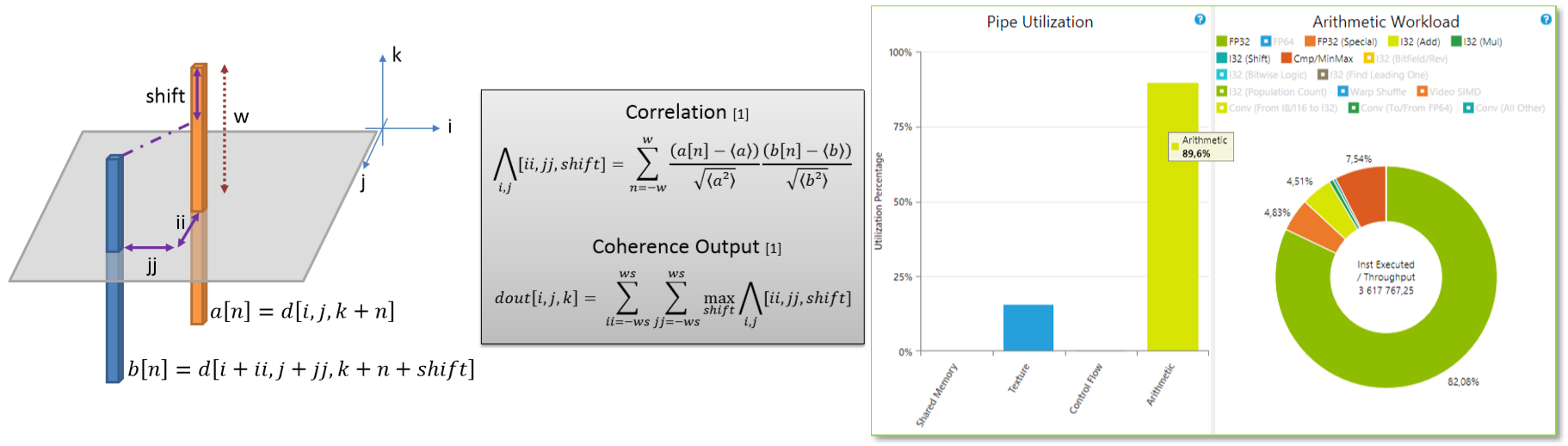

Java Image Processing: How Runtime Compilation Transforms Memory-Bound into Compute-Bound

Many image processing algorithms are naturally parallel. However, depending on the filter size or neighborhood search pattern, memory access is critical to performance. We show how loop reordering and fine-tuning of memory locality help achieve the best performance. Using Hybridizer to automatically transform Java bytecode into CUDA source code, together with the new CUDA Runtime Compilation feature, we transformed execution from memory-bound to compute-bound. Applying this technique to oil and gas image processing algorithms yields interactive response times on production-size datasets.
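As a hedged illustration of loop reordering for memory locality (not the code from the talk), the sketch below applies a 3x3 box filter to a row-major image stored in a flat list. The function names are hypothetical. In the first version, `x` is the outer loop, so consecutive inner iterations jump `w` elements in memory; the reordered version walks each row contiguously and slides the window, reusing two of its three column sums so that each output reads 3 new pixels instead of 9. In CPython the timing difference is small because lists hold pointers; the sketch shows the transformation, whose payoff appears in compiled CPU or CUDA code, where adjacent threads reading adjacent addresses allows coalesced accesses.

```python
from typing import List, Sequence


def box3_x_outer(img: List[float], w: int, h: int) -> List[float]:
    """3x3 box filter with x in the outer loop: strided access in row-major data."""
    out = [0.0] * (w * h)
    for x in range(1, w - 1):
        for y in range(1, h - 1):
            s = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    s += img[(y + dy) * w + (x + dx)]
            out[y * w + x] = s / 9.0
    return out


def _col_sum(img: List[float], x: int, rows: Sequence[int]) -> float:
    # Sum of one 3-pixel column of the window; rows holds row start offsets.
    return img[rows[0] + x] + img[rows[1] + x] + img[rows[2] + x]


def box3_y_outer(img: List[float], w: int, h: int) -> List[float]:
    """Same filter after loop reordering: y outer, x inner (contiguous reads),
    with a sliding window that reuses two of its three column sums."""
    out = [0.0] * (w * h)
    for y in range(1, h - 1):
        rows = ((y - 1) * w, y * w, (y + 1) * w)
        left, mid = _col_sum(img, 0, rows), _col_sum(img, 1, rows)
        for x in range(1, w - 1):
            right = _col_sum(img, x + 1, rows)
            out[y * w + x] = (left + mid + right) / 9.0
            left, mid = mid, right  # slide the window one pixel to the right
    return out


w, h = 5, 4
img = [float(v) for v in range(w * h)]
print(box3_y_outer(img, w, h) == box3_x_outer(img, w, h))  # True
```

The test image uses small integer values so that both summation orders are exact and the two versions can be compared for equality; with arbitrary floating-point data they would agree only up to rounding.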

Presented at GTC 2016 – ID S6314

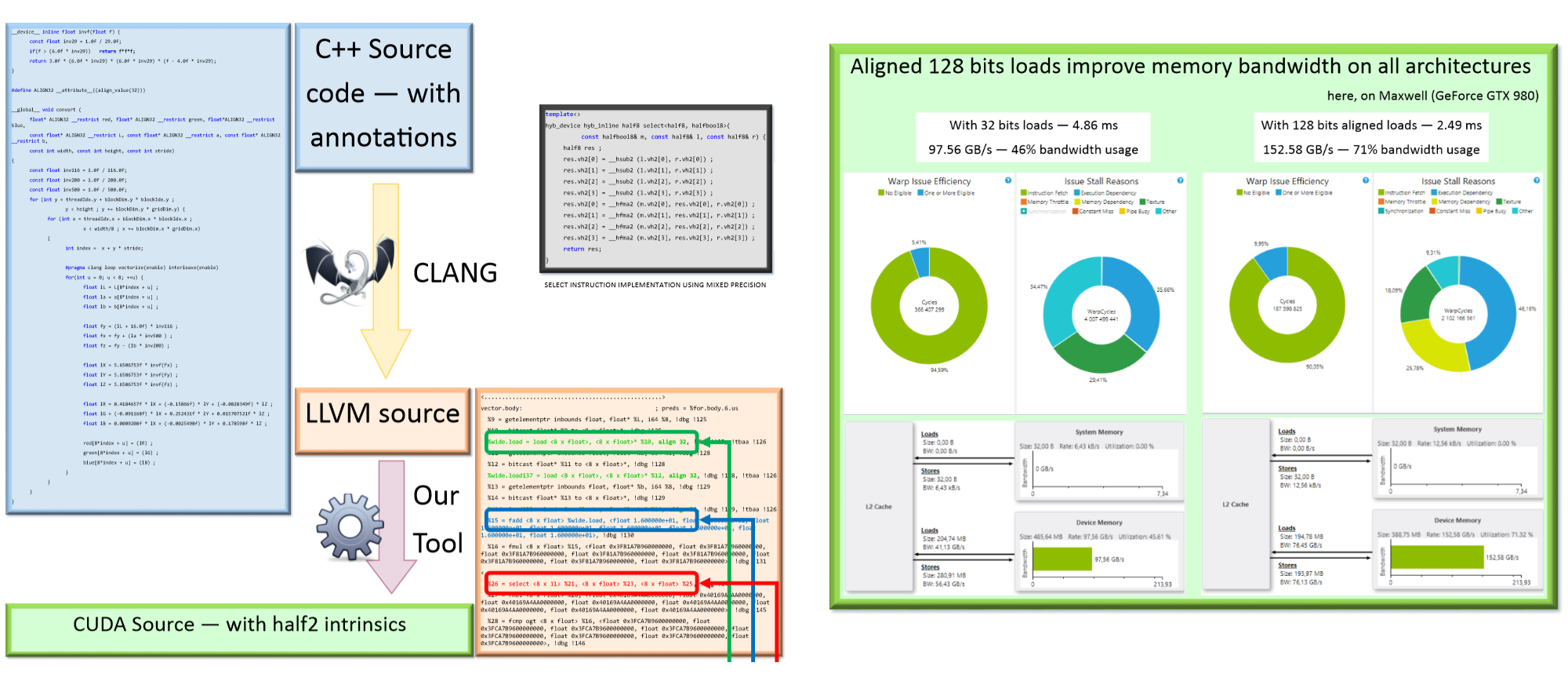

Using CLANG/LLVM Vectorization to Generate Mixed Precision Source Code

At Supercomputing 2015, NVIDIA announced Jetson TX1, a mobile supercomputer offering up to 1 TFLOPS of compute power within a power envelope typical of embedded devices. Targeting image processing and deep learning, this platform is the first available to natively expose mixed precision instructions. However, the new mixed precision unit requires operations on 16-bit floating-point values to be performed in pairs. Hence, approaching peak performance requires the half2 type, which packs two values into a single 32-bit register.

In this work, we present an approach that uses an existing vectorization tool, developed for CPU code optimization, to generate CUDA source code that calls half2 intrinsic functions, thus enabling use of the mixed precision hardware with little effort. With this approach, we are able to generate efficient CUDA code from a single scalar version of the code.

This source-to-source translation may be used in many application fields and for different numeric types. Moreover, the approach has beneficial side effects, such as better memory access patterns and increased instruction-level parallelism.
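The pairing constraint can be emulated in Python to show what a vectorizer does with a scalar half-precision loop. This is a sketch under stated assumptions, not the Clang/LLVM pipeline from the talk: `struct`'s `e` format provides IEEE 754 binary16 rounding, two halves are packed into one 32-bit word in the spirit of `half2`, and the helpers `hmul2`/`hadd2` are hypothetical Python emulations merely named after CUDA's `__hmul2`/`__hadd2` intrinsics. Each operation is rounded separately (no fused multiply-add). The vectorized `haxpy_half2` processes elements two at a time and falls back to the scalar path for an odd trailing element, as generated code must.

```python
import struct
from typing import List, Tuple


def to_half(v: float) -> float:
    """Round a Python float to the nearest IEEE 754 binary16 value."""
    return struct.unpack("<e", struct.pack("<e", v))[0]


def pack_half2(lo: float, hi: float) -> int:
    """Pack two binary16 values into one 32-bit word, in the spirit of half2."""
    return struct.unpack("<I", struct.pack("<ee", lo, hi))[0]


def unpack_half2(word: int) -> Tuple[float, float]:
    lo, hi = struct.unpack("<ee", struct.pack("<I", word))
    return lo, hi


def hmul2(u: int, v: int) -> int:
    # Hypothetical emulation of a paired multiply: both lanes at once.
    (u0, u1), (v0, v1) = unpack_half2(u), unpack_half2(v)
    return pack_half2(u0 * v0, u1 * v1)


def hadd2(u: int, v: int) -> int:
    # Hypothetical emulation of a paired add: both lanes at once.
    (u0, u1), (v0, v1) = unpack_half2(u), unpack_half2(v)
    return pack_half2(u0 + v0, u1 + v1)


def haxpy_scalar(a: float, x: List[float], y: List[float]) -> List[float]:
    """Scalar reference a*x + y, one half-precision value at a time."""
    a = to_half(a)
    return [to_half(to_half(a * to_half(xi)) + to_half(yi)) for xi, yi in zip(x, y)]


def haxpy_half2(a: float, x: List[float], y: List[float]) -> List[float]:
    """Vectorized form: two elements per packed word, odd tail in scalar."""
    n = len(x)
    a2 = pack_half2(a, a)  # broadcast the scalar into both lanes
    out: List[float] = []
    for i in range(0, n - 1, 2):
        xw = pack_half2(x[i], x[i + 1])
        yw = pack_half2(y[i], y[i + 1])
        out.extend(unpack_half2(hadd2(hmul2(a2, xw), yw)))
    if n % 2:
        out.extend(haxpy_scalar(a, x[-1:], y[-1:]))  # remainder element
    return out


x = [0.1, 0.2, 0.3, 0.4, 0.5]
y = [1.0] * 5
print(haxpy_half2(2.0, x, y) == haxpy_scalar(2.0, x, y))  # True
```

Because the product and sum of two binary16 values are exact in double precision before being rounded back to half, the paired and scalar versions produce identical results here; the paired form simply issues half as many operations.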

Altimesh Hybridizer

GPU computing performance and capabilities have improved at an unprecedented pace. CUDA dramatically shortened the learning curve for general-purpose GPU computing. The Hybridizer goes a step further by enabling GPUs in other development ecosystems (C#, Java, .NET) and execution platforms (Linux, Windows, Excel). By transforming .NET binaries into CUDA source code, the Hybridizer acts as your in-house GPU guru. With a growing number of supported features, including virtual functions and generics, the Hybridizer also targets multi- and many-core architectures, making use of advanced optimizations such as AVX and instruction-level parallelism (ILP).

The solution and its features have been presented at several events. Some of these events and presentations are listed here.

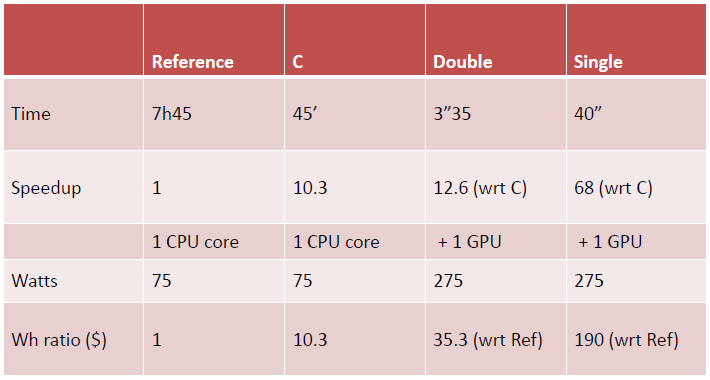

Using GPU for Model Calibration

In this presentation, we discuss the benefits of GPUs for financial model calibration. The new implementation, combined with the benefits of the hardware architecture, results in an overall 190x speedup.

The Waters Power 2009 Europe event took place in London.